Privacy by Design is all about being proactive. It’s a framework for weaving data protection into the fabric of your systems, products, and processes from the very beginning. Think of it less as a feature to be added later and more as a foundational philosophy. By treating privacy as an essential building block, you can head off data privacy issues before they have a chance to surface.

What Privacy by Design Principles Really Mean

Imagine you're building a new house. Would you wait until the entire structure is finished before deciding where to put the locks and security cameras? Of course not. You'd bake those security measures—strong doors, secure windows, a solid foundation—directly into the original blueprint.

That’s exactly what Privacy by Design does for data. It's the blueprint for building digital experiences that people can actually trust. This framework is a fundamental shift away from reactive measures, like scrambling to patch a data breach after the damage is done. Instead, the focus is squarely on prevention, making privacy a non-negotiable requirement throughout the entire development lifecycle. The goal is to build systems where privacy isn't just an option a user has to hunt for; it’s the default setting.

The Shift from Reaction to Prevention

For years, many organizations treated privacy as just another compliance checkbox to tick off before a product launch. This often led to clunky, bolted-on solutions that created terrible user experiences and left gaping security holes. That reactive model isn't just expensive; it completely erodes user trust. A core part of adopting Privacy by Design is integrating robust software development security best practices to build a secure foundation from day one.

The Privacy by Design framework, originally developed by Dr. Ann Cavoukian, is built on seven core principles that guide this proactive mindset. These principles are a roadmap for anyone who handles user data, including:

- Digital Analysts who need to collect data ethically and purposefully.

- Marketers who want to build lasting customer relationships on a foundation of transparency and respect.

- Developers who are on the front lines, building the very systems that either protect or expose user information.

Privacy by Design is about creating a positive-sum game. You don't have to sacrifice great functionality for strong privacy. It’s about achieving both, which in turn leads to greater user confidence and tougher, more resilient systems.

Why This Framework Matters Now

In a world of ever-tightening data regulations and growing consumer awareness, just meeting the legal requirements is the bare minimum. The real competitive advantage comes from earning and keeping your users' trust.

When you build with a privacy-first mindset, you’re doing more than just dodging fines. You're building a better, more sustainable product that truly respects its users. Making this commitment fosters loyalty and helps create a more responsible data ecosystem for everyone. For a deeper look at creating this kind of environment, exploring a modern privacy hub can offer valuable tools and insights. Ultimately, this proactive approach transforms privacy from a legal headache into a core business value.

The 7 Foundational Principles of Privacy by Design

Diving into the privacy by design principles isn't just about compliance; it’s about building a framework that earns genuine user trust. These seven principles, originally developed by privacy expert Dr. Ann Cavoukian, are less of a technical rulebook and more of a strategic mindset. They guide you in baking privacy into the very core of your operations, preventing problems before they ever start.

This proactive stance is catching on fast. The global data privacy software market was valued at a whopping $2.76 billion in 2023 and is expected to explode to $30.31 billion by 2030. That kind of growth sends a clear message: embedding privacy from the get-go isn't just good practice anymore—it's a business imperative. You can find more market insights over at Secureframe.com.

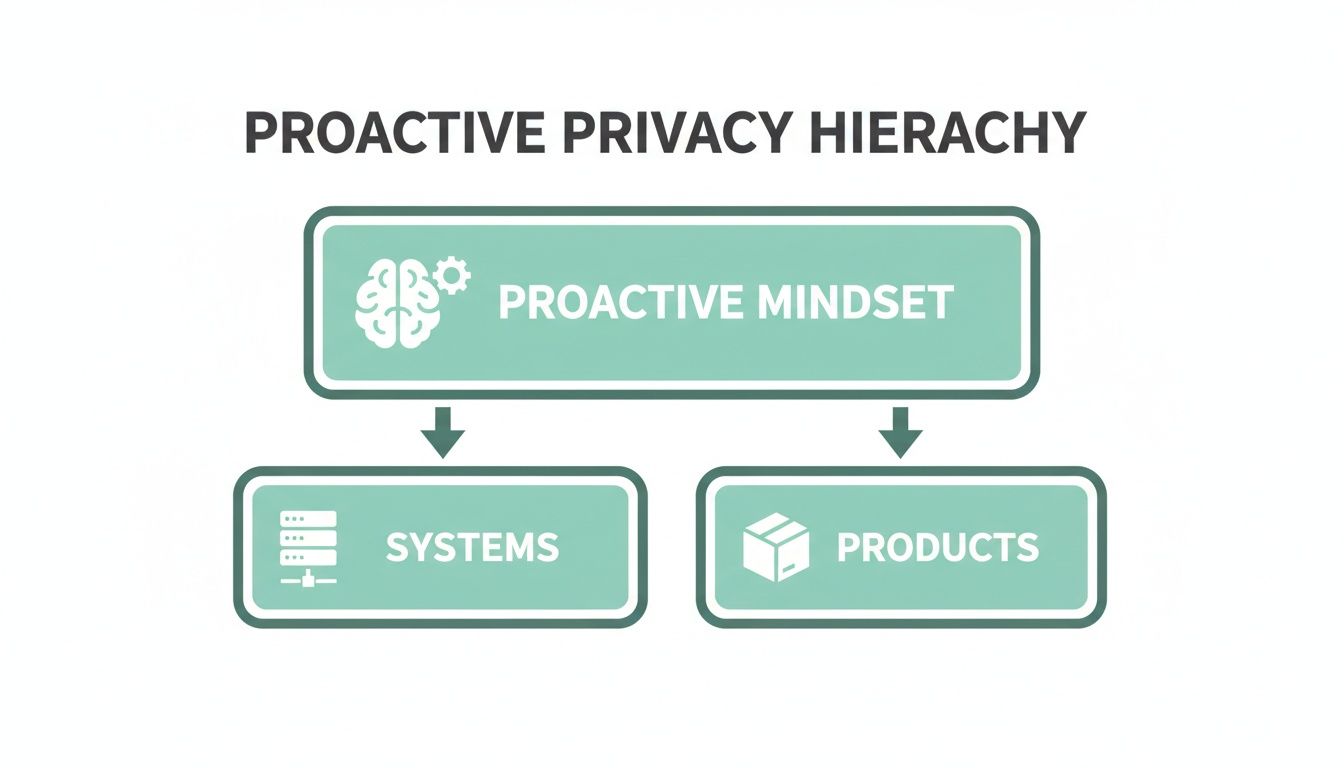

The hierarchy is simple: a proactive privacy mindset should be the bedrock upon which you build safer systems and products.

This visual drives home the point that a privacy-first culture must inform the technical architecture of everything you build, from internal systems to the products your users interact with every day.

To make these concepts easier to digest, here's a quick look at all seven principles and what they mean for your analytics and marketing stack.

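The 7 Principles of Privacy by Design at a Glance

- Proactive not Reactive: Run Privacy Impact Assessments before launching a feature or plugging in a new marketing tool.

- Privacy as the Default Setting: Keep non-essential analytics and marketing trackers off until the user opts in.

- Privacy Embedded into Design: Build data minimization into the schema of every tracking event.

- Full Functionality: Use aggregated and anonymized data to spot trends instead of tracking individual journeys.

- End-to-End Security: Encrypt data in transit and at rest, and automate retention and deletion.

- Visibility and Transparency: Explain in plain language what you collect, why you collect it, and who receives it.

- Respect for User Privacy: Offer a simple preference center where people can manage their consent.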

Now, let's unpack each of these principles to see how they translate into concrete actions for your team.

1. Proactive not Reactive; Preventative not Remedial

This first principle is the heart and soul of the whole framework. Instead of waiting for a data breach and scrambling to clean up the mess, you anticipate and block privacy risks from day one.

Think of it like getting a regular check-up. You visit the doctor to catch potential problems early, not when you're already in the emergency room. A preventative approach is always more effective—and far less costly—than crisis management.

What This Means for Your Analytics: Your team should be running Privacy Impact Assessments (PIAs) before you even think about launching a new feature or plugging in a new marketing tool. This means actively hunting for potential risks—like accidental PII collection—and designing safeguards before a single line of code gets shipped.

2. Privacy as the Default Setting

This is one of the most powerful and user-centric principles. It states that any system you build should offer the highest level of privacy protection right out of the box. No action required from the user.

Imagine getting a new phone where location tracking, ad personalization, and data sharing are all turned off by default. You, the user, have to consciously opt in to share more, not dig through settings to opt out. That's putting the user in the driver's seat.

Privacy as the Default means personal data is automatically protected in any given IT system or business practice. If an individual does nothing, their privacy still remains intact. No action is required on their part to protect their privacy — it is built into the system, by default.

What This Means for Your Analytics: On your website's cookie banner, all non-essential trackers for marketing and analytics should be disabled by default. Users must actively click "Accept" to turn them on, rather than being tricked into opting out of pre-checked boxes.
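
To make the "off by default" idea concrete, here is a minimal sketch in Python. The `ConsentState` class and its category names are hypothetical and not tied to any particular consent management platform; the point is only that every optional category starts as `False` and changes only through an explicit opt-in.

```python
from dataclasses import dataclass


@dataclass
class ConsentState:
    """Hypothetical per-visitor consent record; every optional category starts off."""
    essential: bool = True    # strictly necessary trackers
    analytics: bool = False   # off until the visitor opts in
    marketing: bool = False   # off until the visitor opts in


def allowed_categories(consent: ConsentState) -> set[str]:
    """Return the tracker categories that may load for this visitor."""
    return {name for name, granted in vars(consent).items() if granted}


# A visitor who ignores the banner gets only essential trackers.
untouched = ConsentState()
# A visitor who explicitly accepts analytics, and nothing else.
opted_in = ConsentState(analytics=True)
```

Because the defaults live in the data structure itself, a visitor who never touches the banner cannot end up with marketing or analytics trackers by accident.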

3. Privacy Embedded into Design

Privacy can't be an afterthought bolted on at the end of a project. It needs to be a fundamental part of the core architecture, woven into the system's DNA from the ground up.

It’s like designing a skyscraper to be earthquake-proof from the very first blueprint. The structural supports are part of the building’s skeleton, not just slapped onto the walls later. This makes the entire structure inherently stronger and safer.

What This Means for Your Analytics: When your team designs a new tracking event, the schema itself should be built with data minimization in mind. For example, if you only need an age range for cohort analysis, don't collect a user's full date of birth. That's a design-level choice that prevents over-collection from the start.
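
The age-range example can be sketched as follows. The band boundaries, the `signup_completed` event name, and the function names are assumptions made for illustration; the idea is that the full date of birth is reduced to a coarse band before it ever enters the event payload.

```python
from datetime import date

# Hypothetical cohort boundaries; pick ones that match your own analysis needs.
AGE_BANDS = [(0, 17, "under_18"), (18, 24, "18_24"), (25, 34, "25_34"),
             (35, 49, "35_49"), (50, 200, "50_plus")]


def age_band(birth_date: date, today: date) -> str:
    """Reduce a date of birth to a coarse age band."""
    # Subtract one year if this year's birthday has not happened yet.
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    age = today.year - birth_date.year - (0 if had_birthday else 1)
    for low, high, label in AGE_BANDS:
        if low <= age <= high:
            return label
    raise ValueError(f"age {age} is outside the supported range")


def build_signup_event(birth_date: date, today: date) -> dict:
    """The event carries only the band; the date of birth is never included."""
    return {"event": "signup_completed", "age_band": age_band(birth_date, today)}
```

Since the schema has no field for a birth date, nothing downstream can store or leak one.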

4. Full Functionality — Positive-Sum, not Zero-Sum

There's a common myth that privacy and functionality are enemies—that you have to sacrifice one to get the other. This principle kills that "zero-sum" idea. It challenges you to find creative solutions that deliver both strong privacy and a fantastic user experience.

A ride-sharing app, for instance, needs your location to work. But does it need to store your location history forever? Absolutely not. It can deliver full functionality while respecting privacy by deleting that data as soon as the ride is over.

What This Means for Your Analytics: You can absolutely get valuable business insights without creeping on your users. Instead of tracking every single visitor's individual journey, use aggregated and anonymized data to spot trends. You get the business value, and your users keep their privacy. It’s a win-win.
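
As a rough illustration of trend-spotting on aggregates, the sketch below counts page views per page and suppresses small groups. The suppression threshold is an assumption added here, not something the framework prescribes, and aggregation alone does not guarantee anonymity; it simply shows that the report needs no per-visitor identifiers.

```python
from collections import Counter

# Hypothetical threshold: groups smaller than this are suppressed
# so that a tiny group cannot easily be traced back to individual visitors.
MIN_GROUP_SIZE = 5


def aggregate_page_views(events: list[dict], min_group_size: int = MIN_GROUP_SIZE) -> dict[str, int]:
    """Count views per page and drop groups below the threshold."""
    counts = Counter(event["page"] for event in events)
    return {page: n for page, n in counts.items() if n >= min_group_size}
```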

5. End-to-End Security — Lifecycle Protection

Data needs to be locked down for its entire journey—from the second it's collected to the moment it's securely deleted. Security isn't just about having a strong password; it’s about protecting data during collection, use, storage, and eventual disposal.

Think of it like a high-security courier. The package is kept safe from the moment it leaves your hands, all through transit, right up until it's delivered and signed for. A single vulnerability anywhere in that chain puts the entire delivery at risk.

What This Means for Your Analytics: This means implementing strong encryption for data both in transit (as it flies from a user's browser to your servers) and at rest (when it's sitting in your databases). It also means having automated data retention policies that securely wipe user data once it's no longer needed for its stated purpose.
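
An automated retention policy can be as simple as a scheduled job that compares each record's age to the window for its purpose. The sketch below works on in-memory records, and the purposes and window lengths are hypothetical; a real job would issue deletes against your actual datastore and backups.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per data purpose.
RETENTION = {
    "analytics": timedelta(days=395),
    "support_ticket": timedelta(days=730),
}


def is_expired(record: dict, now: datetime) -> bool:
    """A record expires once it is older than the window for its purpose."""
    # Unknown purposes get a zero-length window, so they do not linger
    # just because nobody assigned them a policy.
    window = RETENTION.get(record["purpose"], timedelta(0))
    return now - record["collected_at"] > window


def purge_expired(records: list[dict], now: datetime) -> list[dict]:
    """Return only the records that are still inside their retention window."""
    return [r for r in records if not is_expired(r, now)]
```

Treating data with no assigned purpose as already expired is a deliberate design choice here: it keeps the policy aligned with the "no longer needed for its stated purpose" rule.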

6. Visibility and Transparency — Keep it Open

You have to be straight with your users about how their data is handled. That means clear, easy-to-read privacy policies, giving users access to their information, and being honest about your data practices.

Nobody wants to read a 40-page legal document. True transparency is about communicating in plain English and empowering users with knowledge. This is how you build the kind of trust that turns casual users into loyal advocates.

What This Means for Your Analytics: Your privacy policy shouldn't look like it was written by a team of lawyers for another team of lawyers. Use simple language to explain what data you collect, why you collect it, which third-party tools you share it with, and how people can exercise their rights.

7. Respect for User Privacy — Keep it User-Centric

Finally, the whole point is to put the user first. This means designing systems that are built around their interests, offering strong privacy defaults, clear choices, and controls that are actually easy to use. The goal is to give users real agency over their personal information.

Building user-centric systems ultimately creates a better, more trustworthy experience that fosters loyalty. A big part of this is knowing how to handle different kinds of sensitive information, a topic we explore more deeply in our guide on achieving PII data compliance.

What This Means for Your Analytics: Provide a simple, accessible user preference center where people can manage their consent settings without a headache. Don't make them jump through hoops to opt out or request their data. Keep it simple, intuitive, and respectful of their choices.

How to Apply These Principles to Your Data Stack

Knowing the seven foundational principles is one thing, but the real work starts when you translate that theory into practice. Applying privacy by design principles to your data stack isn't about bolting on another layer of complexity. It’s about making deliberate, privacy-first choices at every single stage of the data lifecycle.

This is how privacy stops being a static policy document collecting dust on a shelf and becomes a living, breathing part of your day-to-day operations.

Let's walk through how to put these principles into action across three key stages: data collection, data processing, and data activation. By grounding these concepts in the real world, you can build a more resilient and trustworthy data ecosystem from the ground up.

This is the end goal: turning abstract privacy ideas into a concrete system that protects user data across your entire tech stack.

Reinforcing Privacy at the Point of Data Collection

The best way to fix privacy issues is to prevent them from happening in the first place. Your first and most critical opportunity is right at the point of collection. This is where you enforce the principles of Data Minimization and Privacy as the Default Setting. The goal is to be surgical about what you collect and build an automated gatekeeper that blocks unwanted data before it even touches your systems.

Think of your data collection endpoint as a bouncer at an exclusive club. The bouncer has a strict guest list—your tracking plan or schema—and turns away anyone not on it. This is far more effective than trying to track down and kick out problematic data after it’s already inside and mingling with other systems.

Here’s how to put this into practice:

- Implement Strict Schema Validation: Define exactly what data you need for each event in a formal tracking plan. Use an automated QA or observability tool to validate every single incoming event against this schema in real time. If an event contains an unexpected property or PII (like an email in a `product_id` field), the system should block it on the spot.

- Default Consent to "Off": Make sure your consent management platform (CMP) has all non-essential analytics and marketing trackers switched off by default. Users have to actively opt in to turn them on. This is a direct implementation of "Privacy as the Default" and builds trust right from the first interaction.

- Automate PII Detection: Human error is a given. Sooner or later, a developer might accidentally push code that captures sensitive form inputs. Automated PII detection scans incoming data for patterns like email addresses, phone numbers, or credit card info, alerting you instantly to stop a potential breach before it ever becomes one.
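
The "bouncer with a guest list" can be sketched in a few lines. The tracking plan, event names, and the deliberately simple email pattern below are all hypothetical; a production validator would cover more PII types and run at your collection endpoint.

```python
import re

# Hypothetical tracking plan: event name -> allowed properties and their types.
TRACKING_PLAN = {
    "product_viewed": {"product_id": str, "price": float},
    "checkout_started": {"cart_value": float, "item_count": int},
}

# Deliberately simple email pattern; a real detector would cover more PII types.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def validate_event(name: str, properties: dict) -> list[str]:
    """Return a list of violations; an empty list means the event may pass."""
    schema = TRACKING_PLAN.get(name)
    if schema is None:
        return [f"event '{name}' is not in the tracking plan"]

    violations = []
    for key, value in properties.items():
        if key not in schema:
            violations.append(f"unexpected property '{key}'")
        elif not isinstance(value, schema[key]):
            violations.append(f"property '{key}' should be {schema[key].__name__}")
        # Scan string values for PII even when the property itself is allowed.
        if isinstance(value, str) and EMAIL_RE.search(value):
            violations.append(f"possible email address in '{key}'")
    return violations


def accept(name: str, properties: dict) -> bool:
    """Gatekeeper: only events with no violations are forwarded."""
    return not validate_event(name, properties)
```

Note that an email inside `product_id` passes the type check, since it is still a string; it is the content scan that catches it. That is why schema validation and PII detection work best together.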

Securing Data During Processing and Storage

Once data is in the door, the focus shifts to protecting it throughout its entire lifecycle. This stage is all about bringing the principles of End-to-End Security and Privacy Embedded into Design to life. The key is to handle data responsibly, lock down access, and ensure user consent is respected programmatically at every turn.

By treating data processing not just as a technical step but as a critical privacy checkpoint, you ensure that user preferences are honored long after the initial interaction. This is where consent moves from a checkbox on a banner to a functional rule enforced by your architecture.

Here are a few techniques to minimize risk while data is in your custody:

- Pseudonymization and Anonymization: Whenever possible, replace direct identifiers with pseudonyms. For example, swap a `user_id` like "jane.doe@email.com" for a randomly generated string like "A83B-4C1F-9E2D". This lets you perform user-level analysis without storing direct identifiers in your analytics tools. Keep in mind that pseudonymized data can still count as personal data under regulations such as GDPR, because it can be linked back to a person; only truly anonymized data falls outside that scope.

- Enforce Consent Flags Programmatically: User consent isn't a one-and-done event; it's a dynamic state that all downstream systems must respect. Make sure consent flags (e.g., `analytics_consent=denied`) travel with the user data. Your systems should be built to automatically block data from flowing to tools like Google Analytics or marketing platforms if the right consent isn't there.

- Implement Strong Encryption: All data must be encrypted both in transit (using TLS) as it moves from the user's device to your servers, and at rest when it's sitting in your databases or data warehouse. This is a foundational layer of security against unauthorized access.
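
Here is one way the first two techniques might look in code. Instead of a randomly generated string, which needs a lookup table to stay consistent, this sketch uses a keyed hash (HMAC-SHA256) so the same user always maps to the same pseudonym; that is a swapped-in technique, not the only option. The key, destination names, and flag names are hypothetical.

```python
import hashlib
import hmac

# Assumption: the real key lives in a secrets manager, not in source code.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-vault"

# Hypothetical mapping of downstream destinations to the consent flag they require.
DESTINATION_CONSENT = {
    "product_analytics": "analytics_consent",
    "ads_platform": "marketing_consent",
}


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, identifier.lower().encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def route_event(event: dict) -> list[str]:
    """Return the destinations this event may be sent to, based on its consent flags."""
    return [
        destination
        for destination, flag in DESTINATION_CONSENT.items()
        if event.get(flag) == "granted"  # anything else, including a missing flag, blocks
    ]
```

Routing on an explicit `"granted"` value means a missing or malformed flag fails closed: the event simply goes nowhere.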

Activating Data with Privacy Safeguards

The final stage, data activation, is where you use data to drive business decisions, personalize experiences, or run marketing campaigns. This is also where privacy controls often fall apart as data gets shared with countless third-party tools. Here, the principles of Respect for User Privacy and Visibility and Transparency are non-negotiable. For a masterclass in applying these principles, look at the rigorous standards involved in achieving HIPAA compliance, which set a high bar for protecting sensitive information.

Your mission here is simple: ensure data is only used for its intended purpose and that access is strictly controlled.

- Configure Role-Based Access Control (RBAC): Not everyone in your company needs access to everything. Implement RBAC in your analytics platforms, data warehouses, and BI tools. A marketing intern, for instance, should only see aggregated campaign dashboards, not raw, event-level user data.

- Prevent Unauthorized Pixel Firing: Marketing pixels and third-party tags are notorious for data leakage. Use a continuous monitoring solution to catch when tags fire without valid user consent. An observability platform can alert you if a Facebook pixel, for example, activates for a user who opted out of marketing cookies, giving you an automated backstop against consent misconfigurations.
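
The access-control idea above can be sketched as a simple ceiling per role. The role names and sensitivity levels are hypothetical; in practice you would configure the equivalent rules in your warehouse or BI tool rather than in application code.

```python
# Ordered from least to most sensitive.
LEVELS = ["aggregated", "pseudonymized", "raw"]

# Hypothetical roles and the most detailed data level each may read.
ROLE_MAX_LEVEL = {
    "marketing_intern": "aggregated",
    "analyst": "pseudonymized",
    "privacy_engineer": "raw",
}


def can_read(role: str, dataset_level: str) -> bool:
    """Allow access only if the dataset is no more sensitive than the role's ceiling."""
    ceiling = ROLE_MAX_LEVEL.get(role)
    if ceiling is None or dataset_level not in LEVELS:
        return False  # unknown roles and unknown levels get no access
    return LEVELS.index(dataset_level) <= LEVELS.index(ceiling)
```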

By systematically applying these privacy by design principles at each stage, you build a robust, multi-layered defense. This continuous verification turns your privacy policy from a set of rules into an automated, reliable, and enforceable system.

Common Privacy by Design Mistakes to Avoid

Even with the best intentions, it's easy to stumble when putting privacy by design principles into action. Adopting the philosophy is a great first step, but avoiding the common pitfalls is what really separates a paper policy from a resilient data ecosystem. A lot of teams fall into the trap of treating privacy as a one-and-done project.

That "set it and forget it" mindset is a huge mistake. The truth is, your data stack is a living, breathing system. It’s always evolving as you add new tools, update features, or as user behaviors shift. A privacy framework that was perfect six months ago might already have holes in it today.

This constant change is where the risk creeps in. Adding a new marketing analytics tool, a small code change from a developer, or a misconfigured third-party tag can quietly open the door to new vulnerabilities. This is often called tech stack drift, and it slowly erodes your original privacy design, exposing you to compliance headaches and data breaches.

The Myth of the One-Time Fix

One of the most common mistakes is treating privacy like a project with a start and end date. Teams will do a big audit, roll out a consent management platform, and figure the job is done. But that completely misses the dynamic nature of modern digital products.

A privacy-aware design is not a static blueprint but a living system. It requires ongoing observation and maintenance to remain effective against the constant pressures of human error and technological change.

A single forgotten setting in a new customer data platform (CDP) or an overlooked data flow to a partner can unravel all your hard work. The answer isn't to stop innovating; it's to accept that privacy is a continuous process, not a one-off task. This means shifting from periodic check-ups to constant monitoring.

Forgetting to Vet New Tools

Another classic pitfall is letting new marketing and analytics tools into the stack without a proper privacy check. In the rush to get the latest tech, teams often forget to look at how a new tool collects, processes, and shares user data. Every new integration is a potential weak spot.

- Unvetted Third-Party Scripts: A new advertising pixel could start collecting way more data than your privacy policy says it does.

- Misaligned Data Models: A shiny new analytics tool might not respect your data minimization rules, leading to over-collection.

- Consent Signal Blindness: A new tool might not be set up to listen to your CMP, leading it to process data without user consent.

The solution here is to build a clear, mandatory vetting process for any new tech that touches user data. This should include a privacy impact assessment and technical validation to make sure it plays by your rules before it ever gets near production.

The Disconnect Between Design and Reality

The biggest mistake of all is failing to check whether your privacy design is actually working in the real world. Principles on a whiteboard are a great start, but they offer zero protection without enforcement and observation. The numbers bear this out: despite many companies claiming to follow Privacy by Design, 83% are reported to have suffered a data breach. With the average cost of a breach at a reported all-time high of $4.88 million, it's clear that good intentions aren't enough. You can find more insights on the limits of design without monitoring over at Data Dynamics Inc.

To close this gap, you need a system for automated, continuous monitoring. This is what turns your privacy strategy from a theoretical model into an operational reality. It’s your safety net, catching deviations from your design in real-time—whether that's a PII leak, a consent misconfiguration, or an unapproved marketing tag firing off. This is how you operationalize your principles and ensure they’re enforced 24/7.

Using Observability to Enforce Your Privacy Rules

A privacy policy document is just a promise. A truly solid privacy framework needs real teeth, and that means enforcement. Even the most carefully designed system can drift over time thanks to human error, new tool integrations, or simple misconfigurations. This is where analytics observability steps in, acting as the enforcement layer for your privacy by design principles. It transforms your strategy from a static document into an automated, observable, and enforceable system.

Think of it as a 24/7 security guard for your data stack. Your privacy design is the blueprint for the building, but observability is the network of sensors and cameras ensuring no one props open a fire escape or leaves a window unlocked. It provides the continuous verification needed to turn principles into practice.

This constant watchfulness is what gives your privacy framework its power, catching deviations that even the most diligent teams would otherwise miss.

Real-Time PII Leak Detection

The principle of Privacy Embedded into Design demands that privacy be a core, inseparable component of your system. But developers are human, and accidents happen. A minor code change could inadvertently expose personally identifiable information (PII) in an analytics payload, creating a serious data breach.

This is where real-time PII leak detection is an absolute game-changer. An observability platform continuously scans all incoming data against predefined patterns for sensitive information like:

- Email addresses

- Phone numbers

- Credit card information

- Full names or physical addresses

When a leak is detected, the system sends an immediate alert. This gives your team the chance to contain the issue before it escalates. This automated monitoring is the practical enforcement of embedding privacy into your design. No pattern-based detector is perfect, but it acts as a safety net that catches much of what manual QA processes miss.
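
To give a feel for what pattern-based scanning involves, here is a minimal sketch that walks a nested analytics payload looking for email addresses and card numbers. The patterns are simplified assumptions, and the Luhn checksum is added to reduce false positives from long numeric IDs; this is not how any specific observability product works.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to cut false positives from random long numbers."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def scan_payload(payload: dict, path: str = "") -> list[tuple[str, str]]:
    """Walk a nested payload and return (field_path, pii_type) findings."""
    findings = []
    for key, value in payload.items():
        field = f"{path}.{key}" if path else key
        if isinstance(value, dict):
            findings.extend(scan_payload(value, field))
        elif isinstance(value, str):
            if EMAIL_RE.search(value):
                findings.append((field, "email"))
            for match in CARD_RE.finditer(value):
                if luhn_valid(re.sub(r"\D", "", match.group())):
                    findings.append((field, "card_number"))
    return findings
```

Each finding includes the field path, so an alert can point a developer straight at the property that leaked.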

Consent Misconfiguration Alerts

Privacy as the Default is one of the most critical principles for building user trust. It means that non-essential data collection must be off by default, requiring explicit user consent to activate. But in a complex tag management setup, it’s frighteningly easy for a marketing tag to fire against user preferences.

A design is only as good as its implementation. Observability closes the gap between intention and reality, ensuring the privacy rules you designed are the rules your system actually follows, second by second.

Consent misconfiguration alerts directly enforce this principle. An observability tool can monitor the relationship between a user's consent status (provided by your Consent Management Platform) and the behavior of your marketing pixels. If a Facebook or Google Ads tag fires for a user who has denied consent, an alert is triggered instantly. This capability transforms "Privacy as the Default" from a setting on a cookie banner into a continuously enforced rule across your entire data stack. Understanding these dynamics is a cornerstone of any effective, automated marketing observability guide.
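
The core check behind a consent misconfiguration alert is a comparison between what fired and what was granted. The sketch below assumes you already have a log of tag fires and a record of each user's granted categories; the tag names, categories, and data shapes are hypothetical.

```python
# Hypothetical mapping from tag to the consent category it needs.
TAG_CATEGORY = {
    "facebook_pixel": "marketing",
    "google_ads": "marketing",
    "web_analytics": "analytics",
}


def find_consent_violations(tag_fires: list[dict], consent_by_user: dict[str, set[str]]) -> list[dict]:
    """Return every tag fire that lacked the consent category its tag requires."""
    violations = []
    for fire in tag_fires:
        required = TAG_CATEGORY.get(fire["tag"])
        granted = consent_by_user.get(fire["user"], set())  # no record means no consent
        # Unmapped tags are flagged too: an unknown tag has not been vetted.
        if required is None or required not in granted:
            violations.append(fire)
    return violations
```

Each returned entry is a candidate alert: a tag that fired for a user who never granted the matching category, or a tag nobody has classified yet.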

Schema Validation and Data Minimization

The principle of collecting only what is strictly necessary is fundamental to good privacy hygiene. Schema validation is the technical enforcement of this idea. By defining an explicit tracking plan—a "guest list" for your data—you declare exactly which events and properties are approved for collection.

An observability platform validates every single piece of incoming data against this schema.

- Blocking Rogue Events: If a developer accidentally instruments an unapproved event, it's immediately flagged.

- Preventing Property Mismatches: If an event arrives with unexpected properties or incorrect data types (like a user's email in a `product_id` field), the system catches it.

This automated validation ensures your data collection remains lean and purposeful, preventing the "data bloat" that increases your risk profile. It makes data minimization an active, automated process rather than a passive guideline, turning your privacy design into a tangible, operational reality.

Unpacking Privacy by Design: Your Questions Answered

As teams start getting serious about data protection, a few common questions always pop up. Making the switch to a privacy-first mindset means getting clear on the core ideas, knowing the real-world limitations, and busting a few myths along the way.

This FAQ cuts straight to the chase, giving you clear answers to the most pressing questions teams have when putting the privacy by design principles into practice. The goal here is to give you practical advice you can run with, so you can move forward with confidence.

What’s the Difference Between Privacy by Design and Privacy by Default?

It’s really easy to get these two mixed up, but the distinction is crucial. Privacy by Design is the big-picture philosophy—the entire blueprint for building privacy into your systems, your culture, and your processes from the absolute beginning.

Privacy by Default, on the other hand, is just one of the seven foundational principles within that larger framework. It’s the specific rule that says any product or system’s default settings have to be the most private option. The user shouldn’t have to lift a finger to be protected; their privacy is locked down right out of the box.

Think of it like this: Privacy by Design is the decision to build a high-security fortress from the ground up. Privacy by Default is the standing order that the main gate is always locked unless someone with a key actively chooses to open it.

How Can Small Teams with Limited Resources Actually Implement This?

You don’t need a massive budget or a dedicated privacy engineering team to make this work. For smaller companies, the trick is to start with high-impact, low-cost moves and build from there.

The best place to begin is with clarity and documentation.

- Create a Data Dictionary: Your first step is simply to write down what data you collect, where it goes, and why you actually need it. This is a direct win for the Data Minimization principle.

- Establish a Simple Tracking Plan: Get specific about the events and properties you need to track. This is the best way to stop collecting "just-in-case" data that you’ll never use.

- Lean on Automated Tools: Instead of hiring a big team to do manual audits, use automated tools for the heavy lifting, like PII leak detection and consent validation. These solutions give you a ton of protection and act as a force multiplier for a small team.
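
A data dictionary does not need special tooling to be useful. As a rough sketch, it can start as a small structured list that records what each field is, why it exists, and where it goes; the field names and destinations below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataDictionaryEntry:
    """One row of a hypothetical data dictionary."""
    name: str
    purpose: str                   # why the field is needed
    destinations: tuple[str, ...]  # where the field is sent
    contains_pii: bool = False


DATA_DICTIONARY = [
    DataDictionaryEntry("product_id", "conversion funnel analysis", ("product_analytics",)),
    DataDictionaryEntry("age_band", "cohort analysis", ("product_analytics",)),
    DataDictionaryEntry("session_replay_blob", "", ("replay_vendor",)),
]


def fields_without_purpose(entries: list[DataDictionaryEntry]) -> list[str]:
    """Fields nobody can justify are candidates for removal ("just-in-case" data)."""
    return [entry.name for entry in entries if not entry.purpose.strip()]
```

Running the check over the sample list flags `session_replay_blob`, the one field with no documented reason to exist.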

Honestly, the most important step is just making privacy a conscious part of your workflow from day one.

Does Privacy by Design Kill Marketing Performance?

This is probably the most common myth out there, and it’s completely rooted in a zero-sum view of data. The truth is, while a privacy-first approach might mean tweaking some short-term tactics, it pays off in huge long-term benefits for marketing.

By being transparent and respecting user choice, you build trust. Trust is the currency of the modern digital economy, leading to higher-quality engagement, stronger brand loyalty, and more valuable long-term customer relationships.

This approach forces a shift in focus from collecting the most data to collecting the right data from users who are happy to share it. That leads to a much more engaged and valuable audience, which ultimately drives up marketing ROI and helps you build a sustainable, respected brand people actually want to support.

Stop chasing data quality issues and start preventing them. Trackingplan provides a single source of truth for your analytics, giving you automated monitoring, real-time alerts for PII leaks and consent misconfigurations, and the power to enforce your privacy rules across your entire stack. See how you can build trust and ship with confidence at https://trackingplan.com.
