Decisions are only as good as the data they're built on. When dashboards break, attribution models fail, and AI predictions go awry, the root cause is almost always the same: poor data quality. The consequences are significant, ranging from wasted marketing spend and skewed analytics to a complete erosion of stakeholder trust in your reporting. This isn't just an inconvenience; it's a fundamental business risk that undermines every data-dependent operation. The critical need for flawless data extends profoundly into data science initiatives, where accurate and reliable data forms the bedrock for all analytical efforts. In this environment, relying on flawed information isn't an option.

This guide is designed to help you find the best data quality tools to solve this problem permanently. We’ve moved beyond marketing claims to provide a comprehensive, practical analysis of the leading solutions on the market, from established enterprise platforms like Informatica and Talend to modern observability specialists like Trackingplan and Monte Carlo. Each entry includes a detailed breakdown with screenshots and direct links, covering:

- Key Features: What does the tool actually do?

- Ideal Use Cases: Who is this tool built for?

- Pros and Cons: An honest assessment of strengths and weaknesses.

- Implementation Complexity & Pricing: What will it take to get started?

Our goal is to give you a clear, actionable comparison to help your team select the right tool and build a foundation of data you can truly trust. Let's dive into the platforms that can transform your data from a liability into your most valuable strategic asset.

1. Trackingplan

Trackingplan distinguishes itself as a premier, fully automated data quality and analytics observability platform. It is engineered to provide a single source of truth for an organization’s entire analytics implementation across web, mobile, and server-side environments. Unlike traditional methods that depend on brittle, manually maintained test suites, Trackingplan operates on live user traffic. It automatically discovers and maps all analytics events, marketing pixels, and campaign tags, creating a dynamic, always-updated tracking plan.

This proactive approach allows it to serve as one of the best data quality tools for teams looking to move beyond reactive debugging. Its AI-driven engine detects anomalies in real time, from traffic spikes and drops to broken pixels and schema deviations. By identifying the root cause of an issue and delivering actionable alerts via Slack, email, or Microsoft Teams, it empowers marketing, analytics, and development teams to prevent data loss before it corrupts dashboards, skews attribution models, or wastes advertising budgets. The platform’s ability to prevent the business risks of poor data quality makes it an indispensable asset for any data-driven organization.

Key Features & Use Cases

- Automated Discovery & QA: Continuously monitors real user traffic to automatically build and validate your tracking plan, eliminating the need for manual audits.

- Real-Time Anomaly Detection: Instantly flags issues like traffic drops, broken marketing pixels (Google Ads, Facebook, TikTok), missing events, and schema violations.

- Root-Cause Analysis: Provides detailed context for every alert, explaining what broke, where, and why, significantly reducing time-to-resolution.

- Privacy & Compliance Hub: Monitors for potential PII leaks and consent misconfigurations, helping teams maintain data governance and reduce compliance risk.

- Broad Integrations: Offers seamless integration with major analytics platforms (Google Analytics, Adobe Analytics, Amplitude), tag managers, and data warehouses.

Implementation & Pricing

Installation: Implementation is exceptionally straightforward. It requires adding a lightweight (<10KB) asynchronous JavaScript tag to your website or integrating a small SDK for mobile apps. The process is designed to have minimal impact on site performance.

Pricing: Trackingplan does not list public pricing tiers. Prospective users need to sign up for a free trial or book a demo to receive a customized quote based on their traffic volume and specific needs.

AttributeDetailsIdeal ForE-commerce, SaaS, and digital agencies needing reliable analytics and marketing attribution.DeploymentCloud-based SaaS with a simple tag or SDK installation.ComplexityLow. The initial setup is fast, though it may take several days for the platform to learn traffic patterns on low-volume sites.CostCustom pricing. Requires a demo or trial for a quote.

Pros & Cons

Pros:

- Continuous, automated monitoring of live production traffic eliminates manual effort.

- Fast, actionable alerts with root-cause analysis reduce time-to-fix.

- Lightweight installation with broad platform integrations.

- Strong focus on privacy and compliance monitoring.

Cons:

- Lack of public pricing can slow down the initial evaluation process.

- For very low-traffic sites, initial data discovery can take up to a week.

2. Informatica – Data Quality and Observability (IDMC)

Informatica's offering within its Intelligent Data Management Cloud (IDMC) is an enterprise-grade solution designed for large, complex organizations. It extends beyond basic validation, providing a comprehensive suite of tools for data profiling, cleansing, standardization, and enrichment. The platform leverages its AI engine, CLAIRE, to automate rule discovery and suggest data quality improvements, which is a significant advantage for teams managing vast and diverse datasets across hybrid and multi-cloud environments. This focus on AI-driven automation and deep integration with its broader data governance and cataloging services makes it one of the best data quality tools for enterprises that require stringent compliance and have mature data management practices.

Key Features & Use Cases

- AI-Powered Rule Generation: Automatically creates data quality rules by profiling data, reducing manual effort and accelerating deployment.

- Comprehensive Data Cleansing: Offers rich, pre-built transformations for standardizing, de-duplicating, and enriching data, including address verification for over 240 countries.

- Continuous Data Profiling: Constantly monitors data pipelines to detect anomalies and data drift, ensuring quality is maintained over time.

- Ideal Use Case: A multinational financial institution can use Informatica to standardize customer data from various global systems, verify addresses for regulatory compliance, and ensure data lineage is tracked for audit purposes.

Platform Analysis

AspectAssessmentDeployment ComplexityHigh. Implementation requires significant planning and expertise, often involving professional services, reflecting its enterprise focus.Ideal Team SizeLarge enterprises with dedicated data governance and IT teams.Relative CostHigh. Pricing is consumption-based and can be complex, making it a substantial investment compared to more focused tools.Comparison to TrackingplanInformatica provides a much broader, all-encompassing data management platform for enterprise data lakes and warehouses. Trackingplan offers a more targeted, developer-first solution specifically for validating digital analytics and marketing event data at the source.ProsExtremely broad connectivity, robust security features, and a mature, highly respected ecosystem.ConsComplex pricing and packaging can be a significant barrier. The platform can be overkill and too resource-intensive for smaller organizations or teams with narrow data quality needs.

Website:https://www.informatica.com/products/data-quality.html

3. Talend by Qlik – Talend Data Quality

Talend Data Quality, now part of the Qlik ecosystem, is a solution that embeds data quality controls directly into data integration pipelines. It focuses on profiling, cleansing, and standardizing data as it moves through the Talend Data Fabric. The platform is designed for both technical and business users, offering a user-friendly interface with features like the Talend Trust Score to provide an at-a-glance assessment of data health. This approach makes it one of the best data quality tools for organizations already invested in the Talend or Qlik ecosystems, as it provides a unified environment for managing data from ingestion to analytics. Its strength lies in preventing bad data from entering downstream systems in the first place.

Key Features & Use Cases

- Interactive Profiling and Talend Trust Score:Provides an immediate, quantifiable measure of data health, helping teams prioritize data quality efforts.

- Real-time Cleansing and Validation: Embeds data quality rules directly into ETL/ELT jobs to correct and standardize information in-flight.

- Built-in PII Protection: Includes native data masking and encryption capabilities to protect sensitive data and support compliance initiatives like GDPR.

- Ideal Use Case: A retail company can use Talend to cleanse customer data from point-of-sale systems during ingestion, standardize addresses, and mask personally identifiable information before loading it into a centralized data warehouse for business intelligence.

Platform Analysis

AspectAssessmentDeployment ComplexityMedium. While the interface is user-friendly, integrating it deeply into complex data pipelines requires technical expertise in Talend Studio and data integration principles.Ideal Team SizeMid-to-large-sized companies with established data engineering teams.Relative CostHigh. Pricing is not publicly available and requires sales engagement, reflecting its enterprise positioning. The best value is achieved when purchased as part of the broader Talend Data Fabric platform.Comparison to TrackingplanTalend focuses on the quality of batch or streaming data within traditional data pipelines (ETL/ELT). Trackingplan is purpose-built for validating and governing event-based analytics data generated directly from websites and apps at the point of creation.ProsGood balance of powerful features and user-friendly design. Excellent for ensuring data health during ingestion and transformation processes.ConsThe platform delivers maximum value when used within the Talend/Qlik ecosystem, which may lead to vendor lock-in. Pricing is opaque and can be a significant investment.

Website: https://www.talend.com/products/data-quality/

4. Collibra – Data Quality & Observability (Cloud)

Collibra embeds data quality directly into its broader data intelligence and governance platform, making it a powerful choice for organizations prioritizing a governance-first approach. The cloud-native solution provides automated data profiling and monitoring, using machine learning to recommend data quality rules and detect anomalies proactively. Its standout feature is pushdown processing via Collibra Edge, which allows data quality computations to run within the customer's environment, enhancing security and performance. This tight integration with its data catalog and lineage tools makes Collibra one of the best data quality tools for businesses that need to contextualize quality issues within a complete data governance framework.

Key Features & Use Cases

- ML and GenAI-Assisted Rule Creation:Leverages AI to suggest and help build data quality rules in plain language, making it accessible to business users.

- Pushdown Processing: Collibra Edge enables data processing to occur directly within your cloud data warehouse, keeping sensitive data secure and minimizing data movement.

- Integrated Governance Context: Natively links data quality scores and issues to business glossaries, data catalogs, and lineage maps for end-to-end visibility.

- Ideal Use Case: A regulated healthcare organization can use Collibra to define data quality rules for patient records, monitor data feeds from various clinical systems, and automatically create remediation workflows with clear data ownership assigned through the governance platform.

Platform Analysis

AspectAssessmentDeployment ComplexityMedium to High. While cloud-based, configuring Collibra Edge and integrating it fully into a governance framework requires technical expertise and a structured rollout.Ideal Team SizeMedium to large enterprises with established data governance programs and cross-functional data stewardship teams.Relative CostHigh. Pricing is sales-driven and tailored to enterprise needs, representing a significant investment aimed at comprehensive data intelligence.Comparison to TrackingplanCollibra is a holistic data governance platform that includes data quality for enterprise data warehouses. Trackingplan is a specialized, developer-centric tool for ensuring the quality and accuracy of behavioral event data captured from websites and apps at the source.ProsGovernance-native approach provides clear roles and business context. Frequent cloud updates and a strong connector ecosystem.ConsHas a notable learning curve for administrators. The premium, sales-driven pricing model may not be suitable for teams with smaller budgets or scopes.

Website: https://www.collibra.com/resources/collibra-data-quality-observability

5. Ataccama – ONE Data Quality (and Snowflake Native App)

Ataccama ONE offers a comprehensive, AI-powered data quality fabric designed for end-to-end data management, from monitoring and validation to cleansing and remediation. It stands out with its no-code transformation plans and AI-assisted rule authoring, making sophisticated data quality accessible to less technical users. A key differentiator is its flexible deployment, offering a full enterprise platform or a lightweight, free Snowflake Native App. This dual offering makes it one of the best data quality tools for organizations at different maturity levels, especially those heavily invested in the Snowflake ecosystem who want to start with quick, in-database checks before scaling.

Key Features & Use Cases

- Centralized Rule Library: Build, manage, and reuse data quality rules across the entire organization, ensuring consistent standards and pushdown processing for efficiency.

- AI-Assisted Transformations: Leverages AI to suggest cleansing rules and transformations, significantly speeding up the process of data remediation.

- Snowflake Native App: Provides a free, easy-to-deploy option for running predefined data quality checks directly within a Snowflake instance without data movement.

- Ideal Use Case: A retail company using Snowflake can leverage the Native App to quickly profile and validate inventory data. As their needs grow, they can adopt the full Ataccama platform to create custom cleansing rules for customer records and integrate with dbt for quality checks within their transformation pipelines.

Platform Analysis

AspectAssessmentDeployment ComplexityVaries. The Snowflake Native App is very low, deployable in minutes from the marketplace. The full platform is high, requiring a planned enterprise implementation.Ideal Team SizeMedium to large data teams for the full platform; smaller teams or individuals can start with the Snowflake Native App.Relative CostVaries. The Snowflake app is free. The full platform is high-cost and requires engaging with the sales team for a custom quote.Comparison to TrackingplanAtaccama is focused on enterprise data warehouse and data lake quality, handling large-scale batch and streaming data. Trackingplan is a specialized tool for validating customer event data from web and mobile apps at the point of collection, preventing bad data from entering any system.ProsStrong automation and remediation capabilities. Flexible deployment options allow for a "start small, scale up" approach.ConsFull platform pricing is opaque and geared towards large enterprises. The free Snowflake app has limitations on custom rule creation, which requires upgrading.

Website: https://www.ataccama.com/platform/data-quality

6. Monte Carlo – Data + AI Observability

Monte Carlo is a prominent data observability platform designed to bring reliability to data and AI systems. It focuses on automatically monitoring data freshness, volume, distribution, and schema across data warehouses, lakes, and BI tools. The platform excels at preventing "data downtime" by detecting anomalies and using end-to-end lineage to pinpoint the root cause of an issue, from ingestion to analytics. This focus on lineage-aware incident triage and broad pipeline coverage makes it one of the best data quality tools for modern data teams looking to proactively manage data health and reduce the time to resolution for data incidents.

Key Features & Use Cases

- End-to-End Data Lineage: Provides a comprehensive view of data pipelines, allowing teams to quickly trace data issues upstream or downstream to understand their impact.

- Automated Anomaly Detection: Uses machine learning to monitor key data quality metrics without requiring manual rule configuration, alerting teams to unexpected changes.

- Broad Coverage: Integrates with a wide range of sources, including warehouses, data lakes, BI tools, and even GenAI pipelines, offering a centralized observability solution.

- Ideal Use Case: An e-commerce company can use Monte Carlo to monitor its core sales data pipeline. If a key dashboard shows incorrect revenue figures, the team can use its lineage feature to instantly identify a broken transformation upstream, resolve it, and notify stakeholders, all within the platform.

Platform Analysis

AspectAssessmentDeployment ComplexityModerate. Setup is primarily code-based and well-documented, allowing for relatively quick integration with major data platforms, though full enterprise adoption requires planning.Ideal Team SizeMid-sized to large data teams that manage complex data ecosystems and prioritize proactive incident management.Relative CostHigh. Pricing is usage-based on a credit model. While transparent, it requires careful planning to forecast and manage spend effectively.Comparison to TrackingplanMonte Carlo provides broad observability for the data warehouse and BI layer, focusing on data at rest. Trackingplan is a source-level validation tool, ensuring event data is correct before it enters the pipeline, offering a complementary, proactive layer of quality.ProsMature, well-adopted product with powerful lineage capabilities and transparent, credit-based pricing examples. Strong security options like PrivateLink.ConsPrimarily focused on observability and alerting rather than heavy-duty automated remediation. The credit-based cost model can be difficult to predict without historical usage data.

Website: https://www.montecarlodata.com/request-for-pricing/

7. Great Expectations (GX) – GX Cloud and GX Core

Great Expectations (GX) has established itself as the open-source standard for data testing, empowering teams to declare "Expectations" about their data in a clear, human-readable format. It consists of the open-source GX Core library for defining and validating data against these expectations, and the managed GX Cloud platform for collaboration, governance, and observability at scale. This dual offering makes it one of the best data quality tools for teams that want to start small and developer-first, with a clear and scalable path to enterprise-wide data governance. GX's strength lies in its declarative API and its vibrant community, which provide a powerful foundation for building reliable data pipelines.

Key Features & Use Cases

- Declarative "Expectations": Define data quality tests as simple, declarative rules (e.g.,

expect_column_values_to_not_be_null) that are easy to understand and maintain. - Automated Profiling & Documentation:Automatically generates a suite of Expectations by profiling data and produces "Data Docs," which are human-readable quality reports.

- GX Cloud for Collaboration: The managed service adds a UI for monitoring, collaboration, and governance, removing the need for teams to manage their own infrastructure.

- Ideal Use Case: A data engineering team can integrate GX Core directly into their dbt or Airflow pipelines to validate data transformations in development. As the organization grows, they can adopt GX Cloud to centralize expectation suites and provide visibility to business stakeholders.

Platform Analysis

AspectAssessmentDeployment ComplexityLow (GX Core) to Medium (GX Cloud). The open-source library is easy to start with via a pip install. GX Cloud is a SaaS platform that simplifies deployment significantly.Ideal Team SizeFrom individual data practitioners and small teams (GX Core) to large, collaborative enterprises (GX Cloud).Relative CostLow to High. GX Core is free and open-source. GX Cloud offers a free developer tier, with paid tiers for team collaboration and enterprise features.Comparison to TrackingplanGreat Expectations is a general-purpose data quality framework for batch data in warehouses and data lakes. Trackingplan is a specialized, real-time solution for validating streaming event data from websites and apps before it ever reaches a destination.ProsExtremely flexible with a strong open-source community. Low barrier to entry with the free tier and a clear upgrade path from developer workflows to enterprise governance.ConsRequires significant DIY effort and infrastructure management if using only the open-source core. The most valuable governance and collaboration features are locked behind paid GX Cloud tiers.

Website: https://gxcloud.com/

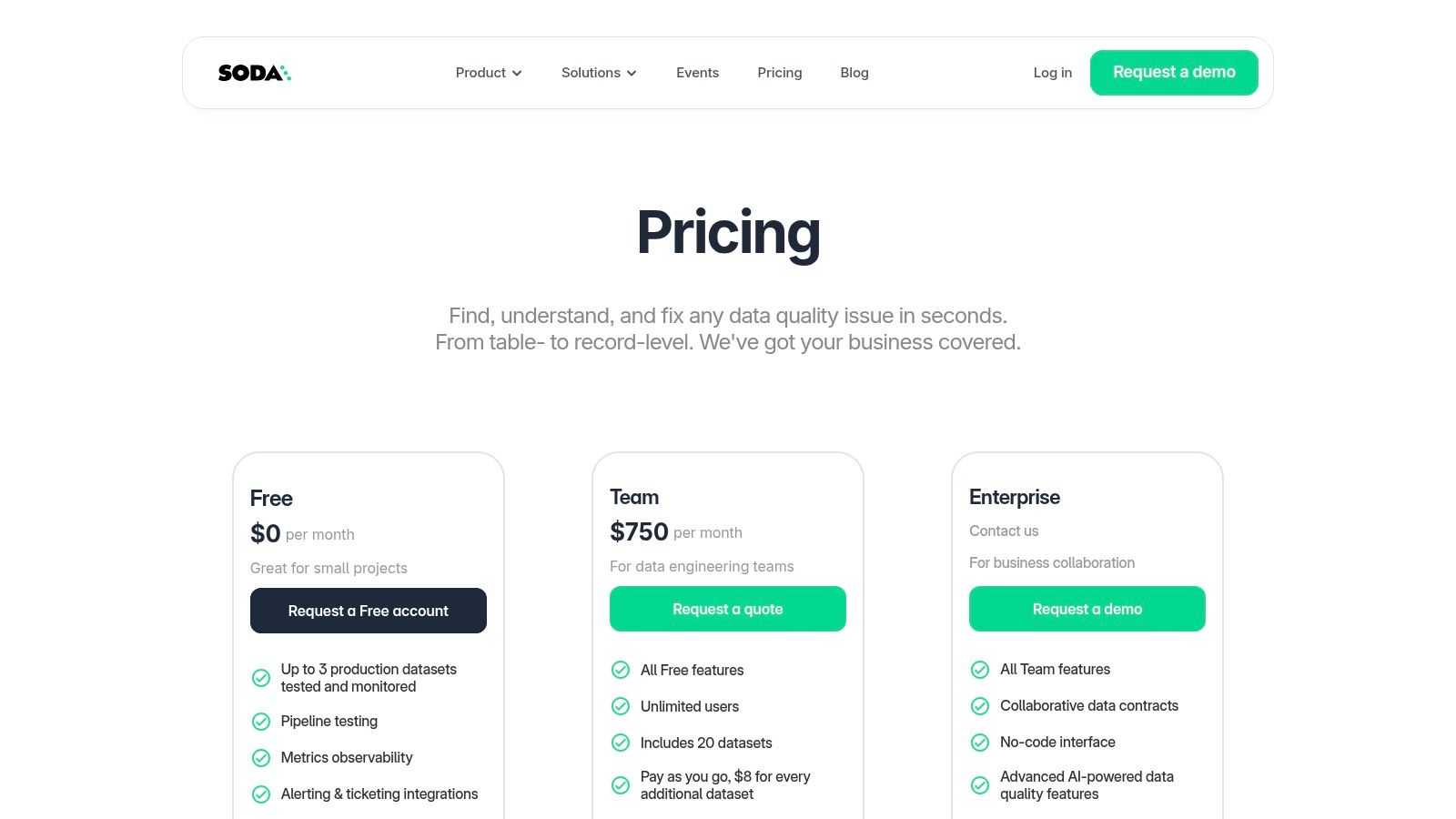

8. Soda – Soda Cloud (Data Quality platform)

Soda Cloud is a modern data quality and observability platform designed for accessibility and quick implementation, making it an excellent choice for growing data engineering and analytics teams. It focuses on providing a collaborative, code-based, and no-code environment for defining, monitoring, and resolving data quality issues directly within data pipelines. By offering transparent and usage-based pricing, including a generous free tier, Soda lowers the barrier to entry for organizations looking to establish a foundational data quality practice. This approach, combined with broad integrations into the modern data stack, establishes it as one of the best data quality tools for teams prioritizing rapid value and scalability.

Key Features & Use Cases

- Declarative Checks with SodaCL: Uses a human-readable language (Soda Checks Language) to define data quality rules, making tests easy to write, read, and maintain.

- No-Code Interface & Data Contracts:Empowers both technical and non-technical users to set quality expectations and establish formal data contracts for key datasets.

- Incident Management & Alerting: Integrates with tools like Slack and PagerDuty to provide immediate notifications and a clear workflow for resolving data issues.

- Ideal Use Case: A fast-growing e-commerce startup can use Soda's free tier to monitor its core product and sales datasets, setting up freshness and schema checks to ensure its analytics dashboards are always reliable as the business scales.

Platform Analysis

AspectAssessmentDeployment ComplexityLow. Designed for a quick start, especially for teams familiar with the modern data stack. The free tier and clear documentation facilitate fast onboarding.Ideal Team SizeSmall to medium-sized data teams, or larger organizations looking for a self-serve, decentralized data quality solution.Relative CostLow to Medium. Offers a free tier and a pay-as-you-go plan based on the number of datasets, which is highly accessible and predictable for scaling teams.Comparison to TrackingplanSoda focuses on monitoring data quality within the data warehouse and during transformation pipelines. Trackingplan is positioned further upstream, validating event data at the point of collection to prevent bad data from entering the warehouse in the first place.ProsVery accessible pricing model and a quick time-to-value. The combination of code-based and no-code interfaces serves a broad range of user skill levels.ConsDataset-based billing requires careful monitoring to manage costs as usage grows. Some advanced AI and enterprise governance features are reserved for higher-tier plans.

Website: https://www.soda.io/pricing

9. Anomalo – Data Quality Monitoring

Anomalo is a data quality platform that uses unsupervised machine learning to automatically monitor enterprise data warehouses and lakes. Instead of relying solely on user-defined rules, it learns the historical patterns and structure of your data to detect unexpected issues like missing data, sudden shifts in distributions, and other subtle anomalies. This AI-driven approach helps teams identify "silent" data errors that would otherwise go unnoticed, making it one of the best data quality tools for organizations that need deep, automated table-level monitoring without extensive manual configuration. Its focus on root-cause analysis and no-code setup makes it accessible to both technical and business users.

Key Features & Use Cases

- Unsupervised ML Monitoring: Automatically learns what normal data looks like and alerts on deviations without needing pre-defined rules for every scenario.

- Root-Cause Exploration: Provides tools to drill down into the specific rows or segments of data that are causing a quality issue, accelerating resolution.

- Cloud Marketplace Procurement: Can be procured directly through cloud marketplaces like AWS, simplifying the purchasing and billing process for existing cloud customers.

- Ideal Use Case: An e-commerce company can use Anomalo to monitor key sales and inventory tables, automatically detecting if a software update causes a sudden drop in product prices or if a data pipeline silently stops delivering data from a specific region.

Platform Analysis

AspectAssessmentDeployment ComplexityModerate. The no-code setup simplifies configuration, but integration with large-scale data warehouses requires data engineering involvement.Ideal Team SizeMedium to large data teams that manage critical datasets in a modern data stack.Relative CostHigh. Pricing is not public and is geared toward enterprise contracts, making it a significant investment.Comparison to TrackingplanAnomalo focuses on monitoring data at rest within the data warehouse, detecting anomalies after the data has landed. Trackingplan validates event data at the source, preventing bad data from ever entering the warehouse in the first place.ProsPowerful detection of subtle and unknown data issues, easy procurement path via AWS Marketplace, and intuitive UI for investigating anomalies.ConsEnterprise-oriented pricing can be a barrier for smaller companies. Its functionality may overlap with broader data observability platforms, creating redundancy in some tech stacks.

Website: https://www.anomalo.com/

10. Bigeye – Data Observability

Bigeye is a data observability platform designed to help data teams build trust in their data by continuously monitoring for quality issues. It focuses on automating the detection of anomalies across freshness, volume, format, and data distributions, allowing teams to catch problems before they impact downstream dashboards or models. By providing out-of-the-box monitoring and actionable alerting, Bigeye reduces the manual toil of writing data quality tests. Its availability on the Google Cloud Marketplace simplifies procurement, making it an accessible option for teams already embedded in the GCP ecosystem who are looking to quickly deploy a robust monitoring solution.

Key Features & Use Cases

- Automated Quality Monitoring: Applies machine learning to automatically monitor key quality metrics like freshness, volume, and schema without requiring manual threshold setting.

- Root Cause Analysis: Integrates lineage to help teams quickly trace data issues from a broken report back to the source table or pipeline job that caused it.

- Cloud Marketplace Procurement: Available directly through the Google Cloud Marketplace, allowing for consolidated billing and faster, more streamlined purchasing.

- Ideal Use Case: An e-commerce data team can use Bigeye to automatically monitor sales data tables in their data warehouse, receiving alerts if an ETL job is delayed (freshness) or if a data load contains an unusually low number of transactions (volume anomaly).

Platform Analysis

AspectAssessmentDeployment ComplexityModerate. Setup is relatively straightforward, especially for teams on supported data warehouses, with a focus on quick time-to-value.Ideal Team SizeSmall to mid-sized data teams or larger enterprises looking for a dedicated observability tool.Relative CostMid-to-High. Pricing is not public and requires contact with sales or a marketplace inquiry, suggesting a premium solution tailored to specific needs.Comparison to TrackingplanBigeye provides observability for data at rest within data warehouses and lakes. Trackingplan focuses on observability at the source, validating event data in real-time before it is ever collected or sent to a warehouse.ProsSimplified procurement via Google Cloud Marketplace. Strong automated monitoring capabilities that reduce manual test writing.ConsPricing is not transparent. The platform is primarily focused on monitoring and detection, with limited native data cleansing or remediation features.

Website: https://www.bigeye.com/

11. AWS Marketplace – Data Quality Solutions

AWS Marketplace isn't a single data quality tool, but rather a digital catalog that simplifies finding, purchasing, and deploying third-party data quality software directly within the AWS ecosystem. It acts as a central procurement hub, allowing organizations already invested in AWS to use their existing billing and procurement channels to acquire solutions from various vendors. This approach is ideal for teams looking to compare different data quality tools, from comprehensive platforms to specialized accelerators, without navigating separate legal and purchasing processes for each one. The ability to discover, test, and deploy software through a unified interface makes it a strategic choice for streamlining technology acquisition and management.

Key Features & Use Cases

- Consolidated AWS Billing: Purchase and manage subscriptions for multiple data quality tools through a single AWS bill, simplifying budget management.

- Wide Vendor Selection: Access a broad range of solutions, from ML-driven anomaly detection to rule-based validation frameworks, all in one place.

- Streamlined Procurement: Expedites legal and procurement cycles for approved vendors and supports private offers for custom pricing and terms.

- Ideal Use Case: An enterprise with a cloud-first strategy can use the AWS Marketplace to quickly trial and deploy a data quality solution for their Amazon Redshift data warehouse, leveraging their existing AWS enterprise agreement for a faster rollout.

Platform Analysis

AspectAssessmentDeployment ComplexityVaries. Deployment ranges from simple SaaS subscriptions to complex configurations via AMIs or containers, depending entirely on the chosen vendor's product.Ideal Team SizeAny team operating within the AWS ecosystem, from small startups to large enterprises, that wants to simplify software procurement.Relative CostVaries. Pricing models differ by vendor and include free trials, hourly/annual subscriptions, and private offers. Cost is determined by the specific tool selected.Comparison to TrackingplanThe AWS Marketplace is a procurement platform where one might find various data quality tools. Trackingplan is a specific, developer-focused product for ensuring event data quality at the source and would be one type of specialized solution a team might look for.ProsSimplifies purchasing and renewals through a single channel. Excellent for discovering and comparing multiple data quality options and vendor offerings.ConsThe quality and detail of vendor listings can be inconsistent. Many powerful tools only provide pricing through private quotes, requiring direct sales contact.

Website: https://aws.amazon.com/marketplace/

12. Avo – Event Tracking Plan & Observability

Avo is a platform designed to ensure the quality and governance of event tracking at the source, helping product, analytics, and marketing teams define, validate, and monitor events and properties across all apps and websites with a consistent, collaborative tracking plan.

Why it matters

Avo allows teams to establish a centralized, collaborative tracking plan (not just a spreadsheet) and validate that implemented events match that plan, catching issues such as missing events, inconsistent properties, or schema errors before the data reaches analytics tools.

Key Features & Use Cases

Tracking Plan Management: Define and collaborate on a centralized event plan with rules and quality standards, preventing inconsistencies across platforms and tools.

Avo Inspector: Monitor production instrumentation in real time to detect discrepancies between defined events and actual implementation.

Real-Time Alerts & Validation: Set up alerts for tracking plan violations (missing properties, wrong types, unexpected events) and notify teams via Slack or other channels.

Codegen & Schema Sync: Generate type-safe event instrumentation code and synchronize schemas with tools like Segment Protocols, Amplitude Govern, or Mixpanel Lexicon.Ideal Use Case Product or analytics teams who want to move away from tracking spreadsheets and need a collaborative, validated, and audited source of truth for web and app events before that data impacts dashboards or attribution models.

Deployment & Pricing

Avo offers a free plan with limits on events and basic tracking plan + Inspector functionality, as well as team and enterprise plans with advanced collaboration, alerts, and continuous quality control.

Top 12 Data Quality Tools Comparison

Your Action Plan: Choosing the Right Data Quality Tool for Your Team

Navigating the landscape of the best data quality tools can feel overwhelming, but making the right choice is crucial for building a data-driven culture founded on trust. As we've explored, the market offers a diverse range of solutions, from enterprise-grade platforms like Informatica and Collibra designed for complex data governance to developer-centric open-source options like Great Expectations. Your ideal tool depends entirely on your specific context, team structure, and primary data challenges.

The key takeaway is that data quality is not a one-size-fits-all problem. A solution like Monte Carlo excels at providing end-to-end observability for data engineering pipelines, while a tool like Trackingplanis purpose-built to solve the often-overlooked but critical issues plaguing customer-facing analytics and marketing data. Understanding this distinction is the first step toward a successful implementation.

From Evaluation to Implementation: Your Next Steps

To move from analysis to action, you need a structured evaluation process. Don't get distracted by endless feature lists; instead, focus on the core problems you need to solve right now and the strategic capabilities you'll need in the future.

Follow these steps to make a confident decision:

- Define Your Primary Use Case: Are you trying to fix broken dashboards for your marketing team? Or are you ensuring data integrity within a massive Snowflake data warehouse? Your answer will immediately narrow the field. If your pain point is inaccurate analytics from your website or mobile apps, a specialized tool like Trackingplan is a far better fit than a general-purpose data catalog.

- Assess Your Team's Skillset: Be realistic about the technical resources at your disposal. A solution like Great Expectations offers immense power but requires significant engineering expertise to manage. Conversely, platforms like Soda or Trackingplan offer a much faster time-to-value with less implementation overhead, making them accessible to a broader range of teams.

- Map to Your Existing Tech Stack: The best data quality tool is one that integrates seamlessly into your current environment. Check for native connectors to your data warehouse (e.g., Snowflake, BigQuery), ETL/ELT tools, and business intelligence platforms. A tool that creates more friction than it resolves will never achieve full adoption.

Creating Your Decision Matrix

To formalize your evaluation, create a simple decision matrix. This will help you objectively compare your shortlisted candidates and present a clear recommendation to stakeholders.

Score each potential tool on a scale of 1-5 across these critical dimensions:

- Business Impact: How effectively does it solve your primary pain point?

- Implementation Complexity: How much time and engineering effort is required to get started?

- Ease of Use: Can non-technical users (like analysts or marketers) leverage the platform?

- Integration Capabilities: How well does it connect with your existing stack?

- Total Cost of Ownership: Consider not just the subscription fee but also implementation, training, and maintenance costs.

- Scalability: Will the tool grow with your data volume and organizational needs?

By using this structured framework, you transform a complex decision into a clear, evidence-based choice. Selecting one of the best data quality toolsis more than a technical purchase; it's a strategic investment in the reliability and accuracy of every data-driven decision your organization makes. The right platform will empower your teams, foster trust in your data, and unlock the true potential of your analytics initiatives.

If your biggest data quality headaches stem from incomplete or inaccurate customer analytics, Trackingplan is designed to solve that specific problem. Our platform automatically validates your marketing and product analytics implementation, alerting you before bad data pollutes your dashboards and reports. See how Trackingplan can bring clarity and trust back to your most critical business metrics.